Speech recognition instructions and troubleshooting during research

Last updated: June 6, 2025

Some users with mobility issues use speech recognition applications (also known as “voice command” or “voice to text”) to interact with their desktop and mobile devices. This page explains:

How speech recognition apps work

What challenges research participants may face during sessions

How to troubleshoot these challenges

About speech recognition apps

Speech recognition apps perform actions based on a user’s spoken instructions. Examples of what these apps can do include:

Dictating notes or transcribing meetings and audio files

Activating virtual assistants (like Siri or Alexa)

Interacting with a user interface using only the user’s voice

Apps used by research participants

Research participants may use a speech recognition app that lets them interact with user interfaces – their operating system, software, and web pages – with their voice alone.

These apps include, among others:

Dragon NaturallySpeaking (a commercial product)

Voice Control (built into macOS, iPadOS, and iOS)

Windows Speech Recognition (built into Windows)

Voice Access (built into Android)

Utterly Voice or Talon (free apps)

How speech recognition apps work

Users can activate the app using a specific voice command, generally “Wake up” or “Start listening.”

For basic actions like closing a window or scrolling down a web page, they use built-in commands like “scroll down” and “close window.” (Exact commands vary by operating system and app.)

For more complex interactions (like pressing a button on a web page), users have a few options:

Use the accessible name of an interactive item to select it

Example: “Click ‘Start my application’” (on desktop) or “Tap ‘Start my application’” (on mobile) to activate the “Start my application” button.

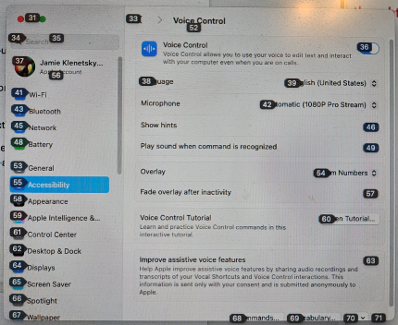
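To make the matching step concrete, here is a minimal Python sketch of how an app might resolve a “Click …” or “Tap …” command against accessible names. It is an illustration only, not how any particular app is implemented: `Element`, `normalize`, and `find_targets` are hypothetical names, and real apps typically use more forgiving matching.

```python
from dataclasses import dataclass


@dataclass
class Element:
    accessible_name: str  # the name exposed to assistive technology
    role: str             # e.g. "button" or "link"


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so spoken and on-screen text compare cleanly.
    return " ".join(text.lower().split())


def find_targets(command: str, elements: list[Element]) -> list[Element]:
    """Return elements whose accessible name matches a 'Click <name>' or 'Tap <name>' command."""
    spoken = normalize(command)
    for verb in ("click ", "tap "):
        if spoken.startswith(verb):
            name = spoken[len(verb):]
            return [e for e in elements if normalize(e.accessible_name) == name]
    return []  # not a click/tap command


# Hypothetical page with two interactive elements.
page = [
    Element("Start my application", "button"),
    Element("Sign in", "link"),
]
matches = find_targets("Click start my application", page)
```

In this sketch, a command only succeeds when the spoken words equal the accessible name, which is why a control whose accessible name differs from its visible label can be hard to activate by voice.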

Use a “flag” or number overlay

A user tells the app to display flags (typically “Show flags” or “Show numbers”).

A flag is assigned to each interactive element on screen.

The user says the number associated with the element they want to activate.

Example: To click or tap on the “Voice Control” toggle button in the image below, a user would say “36,” “Click 36,” “Tap 36,” or a similar command (depending on the app).

macOS Voice Control settings

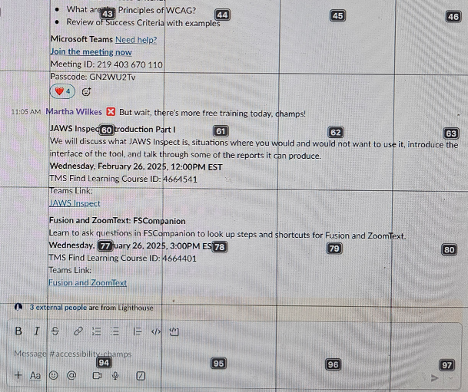
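The flag overlay can be pictured as a simple numbering of interactive elements. The Python sketch below is a rough model under stated assumptions, not any app's actual behavior: `Element`, `assign_flags`, and `resolve` are hypothetical names, the element list is invented for illustration, and real apps decide for themselves which elements get flags and in what order.

```python
from dataclasses import dataclass


@dataclass
class Element:
    accessible_name: str
    interactive: bool


def assign_flags(elements):
    """Number each interactive element, starting at 1, in the order given."""
    interactive = [e for e in elements if e.interactive]
    return {number: e for number, e in enumerate(interactive, start=1)}


def resolve(command, flags):
    """Return the element for commands like '36', 'Click 36', or 'Tap 36'."""
    words = command.lower().split()
    if words and words[0] in ("click", "tap"):
        words = words[1:]  # the verb is optional in some apps
    if len(words) == 1 and words[0].isdecimal():
        return flags.get(int(words[0]))  # None if no element has that flag
    return None


# Hypothetical screen contents.
screen = [
    Element("Accessibility", interactive=False),  # a heading gets no flag
    Element("Voice Control", interactive=True),
    Element("Example button", interactive=True),
]
flags = assign_flags(screen)
target = resolve("Click 1", flags)
```

Because flags depend on what is currently on screen, the number for a given control can change from one view to the next, so participants usually need to show the flags again after the screen changes.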

Use the number grid overlay

A user tells the app to display the number grid (“Show grid”).

The grid divides the screen into numbered squares.

Number grid overlay

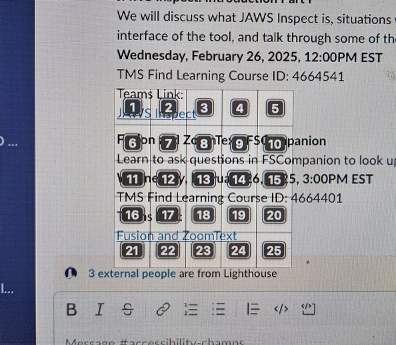

The user can keep “subdividing” the grid until they’re able to pinpoint the element they want to activate.

In this example, the user has “zoomed in” on square 77, and could now say “Click 1” to select the “JAWS Inspect” link:

Zoomed-in number grid
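The subdividing step can be sketched in a few lines of Python. This is an illustrative model only: `Region` and `subdivide` are hypothetical names, and the 3 x 3 grid and 900 x 900 screen are assumptions chosen to keep the arithmetic simple (actual grid sizes vary by app).

```python
from dataclasses import dataclass


@dataclass
class Region:
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

    def center(self):
        return (self.x + self.width / 2, self.y + self.height / 2)


def subdivide(region, number, rows=3, cols=3):
    """Return the numbered cell of a rows x cols grid laid over region.

    Cells are numbered left to right, top to bottom, starting at 1.
    """
    if not 1 <= number <= rows * cols:
        raise ValueError(f"square {number} is not on a {rows}x{cols} grid")
    row, col = divmod(number - 1, cols)
    cell_width = region.width / cols
    cell_height = region.height / rows
    return Region(
        region.x + col * cell_width,
        region.y + row * cell_height,
        cell_width,
        cell_height,
    )


screen = Region(0, 0, 900, 900)
cell = subdivide(screen, 5)   # middle square of the full screen
cell = subdivide(cell, 1)     # top-left square of that square
click_point = cell.center()   # where a click would land: (350.0, 350.0)
```

Each subdivision shrinks the target area, which is why the grid can reach elements that have no accessible name or flag, at the cost of extra spoken steps.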

Troubleshooting

Accessing Zoom meeting controls can be challenging for speech recognition app users. This section explains how to troubleshoot the most common scenarios.

Zoom toolbar is hidden

Issue: On desktop, the Zoom toolbar is hidden by default. This can make it difficult for participants to find the chat and share buttons.

Solution: Direct the participant to turn on the “Always show meeting controls” setting in the Zoom desktop client. (This setting is only available in the desktop client, not from within a meeting.) Voice commands are shown in parentheses:

Open the Zoom desktop client

Click on “Settings” (“Click ‘Settings’”)

Click the “Always show meeting controls” checkbox (“Click ‘Always show meeting controls’”)

Click “Close” to exit the settings menu (“Click ‘Close’”)

These commands might not work for every speech recognition app. In that case, instruct the participant to turn on the number grid, then give them the instructions (without the voice commands).

Can’t select the “Share screen” button

Issue: The participant may not know how to select the Share button or their desktop for sharing.

Solution: Tell them to use the following commands:

Activate the Share button: “Click ‘Share’” or “Tap ‘Share’”

If this doesn’t work, ask the user to select the button using flags or the grid.

Select a screen and start sharing on desktop:

Optional: “Click ‘Share sound’” (if you want the participant to share their system sound)

This command doesn’t work with every speech recognition app. If it’s not working, ask the participant to use flags or the number grid.

“Click ‘Share [desktop name]’” (for example, if the desktop is called “Desktop 1,” say “Click ‘Share Desktop 1’”)

When the participant wants to stop sharing, they can say “Click ‘Stop Share’”

Select a screen and start sharing on mobile:

“Tap ‘Screen’” (this will select the screen for sharing)

“Tap ‘Share broadcast’” (this starts the share)

To end the broadcast, either:

End the meeting, or

Instruct the participant to turn on the number grid to access the necessary controls (“Tap ‘Stop’” and similar commands don’t work).

Can’t access chat / click on a link in chat

Issue: The participant may not know how to access the chat panel or click on links in the chat panel.

Solution:

The participant can say “Click ‘Chat panel’” on desktop or “Tap ‘Chat’” on mobile to open the panel.

Many desktop speech recognition apps aren’t able to access chat messages, including links.

If the participant has issues, instruct them to turn on the number grid to click on links in chat.

Help and feedback

Get help from the Platform Support Team in Slack.

Submit a feature idea to the Platform.