Handling flaky unit tests

Last Updated: January 29, 2026

This guide covers how to handle unit tests that fail intermittently. A test fixture sets up a fixed state, so results should be repeatable; a flaky test is one that can pass or fail against the same code and configuration. When monitoring deploys of vets-website and vets-api, we most often deal with flaky tests in these situations:

A flaky test inside of a pull request

A flaky test in main when an auto-deploy is not nearing

A flaky test in main when an auto-deploy is nearing

General guidance

A flaky test inside of a pull request

If a unit test fails in a pull request, re-run the test suite either by pulling in the main branch, or re-running the test job. This action is the responsibility of the pull request owner and has no effect on the daily deploy.

Vets Website guidance

A flaky test in main when an auto-deploy is not nearing

If a unit test fails in main and a deploy is not nearing (or has already happened for the day), the failure does not put a deploy at immediate risk. The pipeline should still be refreshed to tell whether the test is flaky or legitimately failing. The relevant code owner should then be alerted so they can skip or fix the test before the next deploy (at the discretion of the test owner).

A flaky test in main when an auto-deploy is nearing

If a unit test fails in main and a scheduled deploy is nearing, the Release Tools support team member should refresh the pipeline immediately, open a pull request to skip the test, and alert the code owner to either provide a fix or approve the pull request that skips the test. Ideally the test gets fixed, but in practice merging a fix often takes longer than the deploy schedule allows. That is why the pull request to skip the test should be opened immediately, without waiting for the code owner: delays can fail the deploy. This is the most common reason for a failed deploy, so everyone on a support rotation should be on high alert for it.

While the pull request runs through the pipeline, the support engineer should keep refreshing the main pipeline in case a run passes in time to prevent a failed deploy. Even if the deploy succeeds, the test should still be fixed or skipped so that it does not block future deploys.

Isolated application builds

You can view failed unit tests in the Unit Tests job output or the Mochawesome report in the Unit Tests Summary section of the workflow.

Steps to resolve failed unit tests:

Re-run the failed workflow. You can do so by opening the workflow of the failed commit from the commit status and re-running the failed jobs or re-running the entire workflow.

Merge a PR for skipping the unit test(s) if a fix can’t be merged within an hour.

Once the PR has been merged to either fix the issue or skip the unit test(s), verify that the new commit’s pipeline successfully completes.

If the daily production deploy needs to be restarted, you will need to notify the appropriate Release Tools Team member. For more information, view the Restarting the daily deploy documentation.

Vets API guidance

Failing test in master

When a PR merges, the test suite runs again on the merged commit (to see the result, click on the ❌ for the commit in the list of commits). Occasionally it fails, and 99% of the time the cause is a flaky test. If the test suite fails, the commit is stopped in the pipeline and won’t deploy until it passes its tests or a subsequent PR passes its tests. (See details on the deploy process here.) Follow these steps for failed code checks:

Click on the Details link for the Code Checks / Test.

To make sure it’s not something related to the commit, look for the failed test(s) in the output (or click the “Summary” button, then click the link in “For more details on these failures, see this check”).

If the failure isn’t related to the commit that merged, click the button to re-run the failed jobs.

If the failure is related to the commit, re-run the job to confirm. If the failure is reproducible, fix or revert the PR ASAP. Contact Backend support in #vfs-platform-support to expedite the PR review.

How to prevent flaky tests

Vets API tests run in parallel during CI to make the suite faster. A downside is that tests can sometimes interfere with each other. Common causes are Flippers being enabled or disabled directly in tests, and a shared resource (file, PDF, etc.) being deleted prematurely.

Testing locally

Before committing new specs, run the entire test suite locally a few times in parallel. There are instructions in these docs. Alternatively, follow instructions in the binstubs docs. At the very least, run a subset of the tests in parallel. For example: bin/test modules/claims_api --parallel.
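Running the suite "a few times" is easier to do consistently with a small wrapper. The following is a minimal sketch, not part of vets-api: the count_failures helper is a made-up name, and a trivial Ruby subprocess stands in for the real test command so the sketch runs anywhere.

```ruby
require "rbconfig"

# Run a command several times and return how many of the runs failed.
# `command` is an array such as %w[bin/test modules/claims_api --parallel].
def count_failures(command, runs: 5)
  (1..runs).count do
    # Kernel#system returns true on exit status 0, false on a non-zero
    # status, and nil if the command could not be started.
    !system(*command)
  end
end

# Stand-in commands (assumptions for illustration only).
always_passes = [RbConfig.ruby, "-e", "exit 0"]
always_fails  = [RbConfig.ruby, "-e", "exit 1"]

passes_failed = count_failures(always_passes, runs: 3)
fails_failed  = count_failures(always_fails, runs: 3)

puts "failures (passing command): #{passes_failed}"
puts "failures (failing command): #{fails_failed}"
```

From the root of vets-api, the same helper could be pointed at the command from this guide, for example count_failures(%w[bin/test modules/claims_api --parallel]). A count that is neither zero nor equal to the number of runs suggests a flaky spec.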

Specs with Flippers

Don’t call Flipper.enable(:feature_flag) or Flipper.disable(:feature_flag) in a spec. Instead, mock the call: allow(Flipper).to receive(:enabled?).with(:feature_flag).and_return(true). Directly enabling or disabling a Flipper changes global state, and with thousands of tests running in parallel this can have unintended side effects. (This same guidance is used in an example here.)
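To illustrate why direct toggling is risky, here is a toy model of leaked global state. It is only a sketch: FakeFlags is a hypothetical stand-in for a shared flag store, not the Flipper gem, and the two lambdas stand in for specs. It shows that a spec relying on the default flag value passes or fails depending on what ran before it.

```ruby
# Hypothetical stand-in for a process-wide feature-flag store.
# This is NOT the Flipper API; it only models shared global state.
module FakeFlags
  @enabled = {}

  class << self
    def enable(name)
      @enabled[name] = true
    end

    def enabled?(name)
      @enabled.fetch(name, false)
    end

    def reset!
      @enabled.clear
    end
  end
end

# "Spec A" toggles the flag directly and never restores it.
spec_a = -> { FakeFlags.enable(:feature_flag) }

# "Spec B" assumes the flag is off by default; returns true when it passes.
spec_b = -> { FakeFlags.enabled?(:feature_flag) == false }

# Start from a clean state, run the specs in order, and return the
# result of the observed spec.
def run_in_order(specs, observed)
  FakeFlags.reset!
  results = specs.map(&:call)
  results[specs.index(observed)]
end

b_then_a = run_in_order([spec_b, spec_a], spec_b) # B runs before A
a_then_b = run_in_order([spec_a, spec_b], spec_b) # A leaks state into B

puts "B passes when run first:   #{b_then_a}"
puts "B passes when run after A: #{a_then_b}"
```

Stubbing enabled? inside the spec avoids this, because the stubbed answer is scoped to that example and nothing is written to the shared store.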

Specs dealing with PDFs

When a file is created and deleted within a test, tests running in parallel can collide if the file name is not unique. One test can delete another test’s file, which produces an Unable to find file error. Adding a unique id as a prefix or suffix to the file name can solve this problem, but make sure it’s working as designed and the id is not nil.
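One way to get unique file names is sketched below using only the Ruby standard library. The unique_pdf_path helper and the claim_form prefix are made-up names for illustration, not existing vets-api code; the guard mirrors the advice above to make sure the id is not nil.

```ruby
require "securerandom"
require "tmpdir"

# Build a file path that is unique per call so parallel tests cannot
# collide on, or delete, each other's files.
def unique_pdf_path(prefix, dir: Dir.tmpdir, id: SecureRandom.uuid)
  # A nil or blank id would silently bring back the shared file name,
  # so fail loudly instead.
  if id.nil? || id.to_s.strip.empty?
    raise ArgumentError, "unique id must not be blank"
  end

  File.join(dir, "#{prefix}_#{id}.pdf")
end

path_a = unique_pdf_path("claim_form")
path_b = unique_pdf_path("claim_form")

puts path_a
puts "paths differ: #{path_a != path_b}"
```

Passing the id as a keyword argument keeps the helper usable with an id that comes from the record under test, while still rejecting a nil value.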

How to debug a flaky spec

Automated steps

If you have binstubs set up locally, you can run the command bin/flaky-spec-bisect <github-actions-url-or-run-id>. That automates most of the manual steps listed below and lets you skip to step 8. See the Binstubs setup docs for usage details.

Manual steps

The outcome of these steps is that you’re able to reliably reproduce the flaky spec locally. These instructions are specifically for VS Code, but can be modified for other editors.

Click on your failing code check, then Summary. Scroll down to the Artifacts section.

Download Test Results Group X corresponding to the failing test group.

Open in VS Code.

Note the seed value in the file: <property name="seed" value="xxxxx"/>

Transform the xml into a file you can run with an rspec command by following these steps:

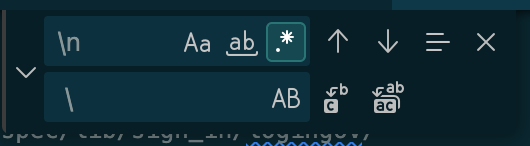

Command F to search within the file and select the

.*option to use Regular Expression.Paste

\./.+?\.rbin the search bar. It'll look like this:

option-returnselects all highlighted files. Command C to copy them.Paste in a new file (doesn’t matter where the file lives).

Command-shift-P → search for and select “Delete Duplicate Lines” to delete duplicate lines.

Do a find-and-replace (using the same Regex search in the new file). Replace

\nwith ` \` so newlines are replaced with a space and a slash.

Find and replace within the new file

Now, all lines are separated by a space and can be run with a single rspec command. Copy the file contents.

In your root directory of vets-api, run bundle exec rspec --seed <seed_value> --bisect <copied filepaths>. This will tell you which test causes another one to fail.

❌ If you see this error:

Running suite to find failures...objc[72370]: +[__NSCFConstantString initialize] may have been in progress in another thread when fork() was called.

run export OBJC_DISABLE_INITIALIZE_FORK_SAFETY=YES and try again.

Once the bisect is complete, it will give you a “minimal reproduction command.” This shows which spec starts the issue (even though it might pass) and which spec fails because the original spec caused a problem. In other words, the failing spec will ONLY fail if the first spec is run before it. Otherwise it will pass. This means the first spec is what makes the second spec flaky.

You can reproduce the failure locally now by running

bundle exec rspec <minimal reproduction command>
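The manual extraction steps above (note the seed, collect the spec paths with the \./.+?\.rb regex, remove duplicates, join them on one line) could also be scripted. This is a minimal Ruby sketch, assuming the downloaded report contains the seed property and file paths in the form shown in this guide. The bisect_command helper and the sample XML are made up for illustration and are not part of vets-api or its binstubs.

```ruby
# Same regular expression as in the manual steps: a path that starts
# with "./" and ends at the first ".rb".
SPEC_PATH = %r{\./.+?\.rb}

# Build the rspec bisect command from the text of a test results XML file.
def bisect_command(xml)
  seed = xml[/<property name="seed" value="(\d+)"/, 1]
  raise ArgumentError, "no seed property found" if seed.nil?

  # scan collects every match; uniq plays the role of
  # "Delete Duplicate Lines" in the editor.
  paths = xml.scan(SPEC_PATH).uniq
  raise ArgumentError, "no spec paths found" if paths.empty?

  "bundle exec rspec --seed #{seed} --bisect #{paths.join(' ')}"
end

# Hypothetical report contents, for illustration only.
sample = <<~XML
  <testsuite>
    <properties><property name="seed" value="12345"/></properties>
    <testcase file="./spec/models/user_spec.rb" name="a"/>
    <testcase file="./spec/models/user_spec.rb" name="b"/>
    <testcase file="./modules/claims_api/spec/foo_spec.rb" name="c"/>
  </testsuite>
XML

command = bisect_command(sample)
puts command
```

With a real report, the text could be read with File.read on the downloaded file and the printed command run from the root of vets-api.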

Help and feedback

Get help from the Platform Support Team in Slack.

Submit a feature idea to the Platform.