Best practices for QA testing

This document describes the Platform’s recommended process for QA testing.

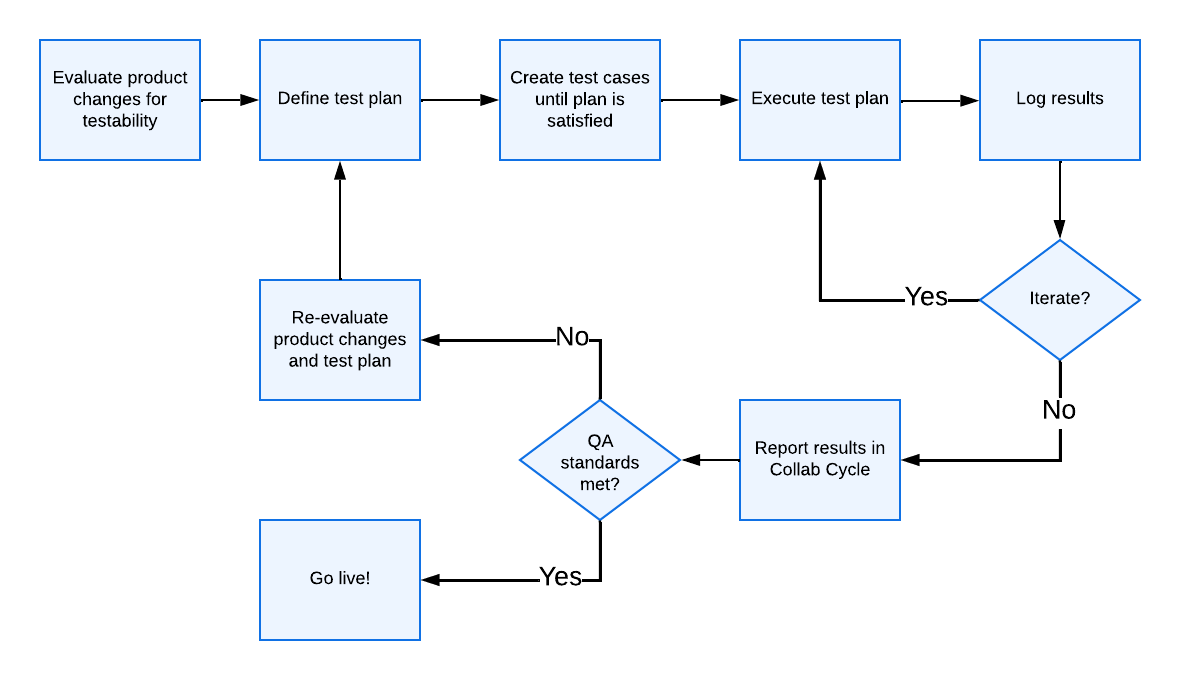

Recommended QA testing process flow

Determine what type of testing your product needs

The type of testing your product needs depends on the type of changes you’re making. Use the criteria below to determine which types of testing are required.

Front-end unit test - always write unit tests, and aim for 80% coverage!

Back-end unit test - always write unit tests!

End-to-end test - always create at least one spec that verifies the happy path of your product, then create additional specs for other high-impact user journeys.

Manual functional test - if you are creating new functionality in your product, execute targeted manual functional tests for each new function.

Exploratory test - if time permits, set aside 30 minutes at the end of the sprint in which you integrate new functionality, before the change is released to production, to conduct exploratory testing in a preview environment or staging.

508/Accessibility test - always conduct accessibility testing, using both automated and manual tests.

User acceptance test - if the stories your team is integrating into the product were derived from stakeholders who remain involved in the product, facilitate user acceptance test sessions with those stakeholders to validate that the acceptance criteria have been met.
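The criteria above can be summarized as a small decision helper. This is a minimal TypeScript sketch, not a Platform tool: the `ChangeProfile` fields and the `requiredTestTypes` function are hypothetical names chosen for illustration, and the flags simply mirror the conditions in the list.

```typescript
// Hypothetical description of a change; field names are illustrative only.
interface ChangeProfile {
  addsNewFunctionality: boolean;
  storiesFromInvolvedStakeholders: boolean;
  timePermits: boolean;
}

type TestType =
  | "Front-end unit test"
  | "Back-end unit test"
  | "End-to-end test"
  | "Manual functional test"
  | "Exploratory test"
  | "508/Accessibility test"
  | "User acceptance test";

// Returns the test types the criteria call for, in the order they are listed.
function requiredTestTypes(change: ChangeProfile): TestType[] {
  // These three are always required, regardless of the change.
  const types: TestType[] = [
    "Front-end unit test",
    "Back-end unit test",
    "End-to-end test",
  ];
  if (change.addsNewFunctionality) types.push("Manual functional test");
  if (change.timePermits) types.push("Exploratory test");
  types.push("508/Accessibility test"); // always required
  if (change.storiesFromInvolvedStakeholders) types.push("User acceptance test");
  return types;
}

// Example: new functionality, no stakeholder-derived stories, no spare time.
const plannedTypes = requiredTestTypes({
  addsNewFunctionality: true,
  storiesFromInvolvedStakeholders: false,
  timePermits: false,
});
```

Encoding the criteria this way keeps the "always" items unconditional, so only the situational test types depend on the change profile.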

Create a test plan

A test plan is a collection of test runs, which makes it easier to create multiple runs at once. Use the test plan’s Description field to describe the changes and reference the relevant product outline and user stories.

You will likely need TestRail access to view many of these artifacts. For information on getting access, see “TestRail access” under Request access to tools: additional access for developers.

Example TestRail Test Plan for reference
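If your team scripts test plan creation instead of using the TestRail UI, a plan's runs and description can be assembled as a request body. The sketch below assumes TestRail API v2's `add_plan/{project_id}` endpoint and uses only a subset of its fields (`name`, `description`, and `entries` with `suite_id`, `name`, `include_all`, `case_ids`). The `buildTestPlanRequest` helper and every ID and URL in the example are hypothetical.

```typescript
// Subset of the JSON body accepted by TestRail API v2 add_plan.
interface PlanEntry {
  suite_id: number;
  name: string;
  include_all: boolean;
  case_ids?: number[];
}

interface AddPlanBody {
  name: string;
  description: string;
  entries: PlanEntry[];
}

// Hypothetical helper: builds the request path and body for a new test plan.
// The description summarizes the change and lists the reference links
// (for example, the product outline and user stories).
function buildTestPlanRequest(
  projectId: number,
  planName: string,
  changeSummary: string,
  referenceUrls: string[],
  runs: { suiteId: number; name: string; caseIds: number[] }[],
): { path: string; body: AddPlanBody } {
  const description = [
    changeSummary,
    "",
    "References:",
    ...referenceUrls.map((u) => `- ${u}`),
  ].join("\n");
  return {
    path: `index.php?/api/v2/add_plan/${projectId}`,
    body: {
      name: planName,
      description,
      entries: runs.map((r) => ({
        suite_id: r.suiteId,
        name: r.name,
        // Include only the listed cases rather than every case in the suite.
        include_all: false,
        case_ids: r.caseIds,
      })),
    },
  };
}

// Example with placeholder IDs and URLs.
const planRequest = buildTestPlanRequest(
  1,
  "Appointments sprint QA",
  "Adds a check for existing appointments.",
  ["https://example.com/product-outline", "https://example.com/user-story"],
  [
    { suiteId: 10, name: "Functional", caseIds: [101, 102, 103] },
    { suiteId: 11, name: "Accessibility", caseIds: [201] },
  ],
);
```

The sketch stops at building the request; sending it would be an authenticated HTTP POST to your TestRail instance, which is left out so the example stays self-contained.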

Create test cases

Use TestRail to create test cases as you build. Be sure to link to the relevant user story in the Reference field of the test case.

Sample Test Case Collection for reference
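Assuming TestRail API v2's `add_case/{section_id}` endpoint, the user story link goes in the case's `refs` field, which TestRail stores as a comma-separated string. The `buildTestCaseRequest` helper and the story references in the example are hypothetical; the check simply enforces the rule that every case links at least one story.

```typescript
// Subset of the JSON body accepted by TestRail API v2 add_case.
interface AddCaseBody {
  title: string;
  // References are stored as a single comma-separated string.
  refs: string;
}

// Hypothetical helper: builds an add_case request whose References field
// points at the user story (or stories) the case verifies.
function buildTestCaseRequest(
  sectionId: number,
  title: string,
  storyRefs: string[],
): { path: string; body: AddCaseBody } {
  if (storyRefs.length === 0) {
    throw new Error("Link at least one user story in the References field");
  }
  return {
    path: `index.php?/api/v2/add_case/${sectionId}`,
    body: { title, refs: storyRefs.join(",") },
  };
}

// Example with a placeholder section ID and hypothetical story references.
const caseRequest = buildTestCaseRequest(
  5,
  "Auth on Appointment Page with appointment",
  ["STORY-1", "STORY-2"],
);
```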

User stories

A single user story may require several test cases to provide full coverage. See example scenarios below.

User Story: As a VA.gov user, I need to be able to check for existing appointments at a VA facility.

| Scenario | Description |
|---|---|
| Scenario 1 | Auth on Appointment Page with appointment |
| Scenario 2 | Auth on Landing Page with appointment |
| Scenario 3 | Auth on Landing Page without appointment |
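The appointment scenarios for this story can be tracked as data to confirm that each one has at least one test case. In this hypothetical sketch, each test case carries a `scenarioId` tag (an illustrative field, not a TestRail field) and `uncoveredScenarios` lists the scenarios that still lack a case.

```typescript
interface Scenario {
  id: string;
  description: string;
}

interface TestCase {
  title: string;
  // Hypothetical tag linking a case to the scenario it covers.
  scenarioId: string;
}

// Returns the scenarios that no test case covers yet.
function uncoveredScenarios(scenarios: Scenario[], cases: TestCase[]): Scenario[] {
  const covered = new Set(cases.map((c) => c.scenarioId));
  return scenarios.filter((s) => !covered.has(s.id));
}

// Scenarios for: "As a VA.gov user, I need to be able to check for
// existing appointments at a VA facility."
const appointmentScenarios: Scenario[] = [
  { id: "S1", description: "Auth on Appointment Page with appointment" },
  { id: "S2", description: "Auth on Landing Page with appointment" },
  { id: "S3", description: "Auth on Landing Page without appointment" },
];

// Only the first two scenarios have cases so far.
const draftCases: TestCase[] = [
  { title: "Appointment Page lists existing appointment", scenarioId: "S1" },
  { title: "Landing Page lists existing appointment", scenarioId: "S2" },
];

const gaps = uncoveredScenarios(appointmentScenarios, draftCases);
```

Here `gaps` contains only Scenario 3, signaling that the story does not yet have full coverage.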

Execute tests

Once you’ve created your test cases and a test run, execute the tests through the TestRail interface.

Log results

As you execute your tests, you can use TestRail to mark each step as pass or fail. When you encounter a failing step, log the failure as a defect in the form of a VA.gov-team GitHub issue and link the defect to the step and test case that failed. Logging results as you execute your test plan gives you strong traceability between the execution of tests and the defects discovered during execution.
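Assuming TestRail API v2's `add_result_for_case/{run_id}/{case_id}` endpoint, a result can carry the linked defect in its `defects` field. This sketch records a case-level result only (step-level results depend on the case template and are not shown) and assumes TestRail's default status IDs (1 = Passed, 5 = Failed), which an instance can customize. The `buildResultRequest` helper and the issue references are hypothetical; the helper refuses to record a failure without a GitHub issue reference, which is what keeps results traceable to defects.

```typescript
// TestRail's default status IDs; an instance may customize these.
const STATUS_PASSED = 1;
const STATUS_FAILED = 5;

// Subset of the JSON body accepted by TestRail API v2 add_result_for_case.
interface AddResultBody {
  status_id: number;
  comment: string;
  // Comma-separated defect references, e.g. GitHub issue numbers.
  defects?: string;
}

// Hypothetical helper: builds a result request and requires a defect
// reference for every failure.
function buildResultRequest(
  runId: number,
  caseId: number,
  passed: boolean,
  comment: string,
  defectIssues: string[] = [],
): { path: string; body: AddResultBody } {
  if (!passed && defectIssues.length === 0) {
    throw new Error("Log a VA.gov-team GitHub issue before recording a failure");
  }
  const body: AddResultBody = {
    status_id: passed ? STATUS_PASSED : STATUS_FAILED,
    comment,
  };
  if (defectIssues.length > 0) body.defects = defectIssues.join(",");
  return { path: `index.php?/api/v2/add_result_for_case/${runId}/${caseId}`, body };
}

// Example: a failed case linked to a hypothetical issue reference.
const failedResult = buildResultRequest(7, 42, false, "Step 3: no appointments shown", ["#123"]);
const passedResult = buildResultRequest(7, 43, true, "All steps passed");
```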

Report results

After you’ve created a test plan, linked references, executed the test plan, and linked defects where appropriate, it’s time to report the results of your QA testing efforts to stakeholders. TestRail includes several built-in report templates you can use.
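TestRail's built-in templates cover most reporting needs. For a quick count to include in a stakeholder update, a summary can also be computed from the recorded statuses. This is a hypothetical sketch: `summarizeResults` is an illustrative name, and the status names are simplified labels rather than TestRail's status IDs.

```typescript
type Status = "passed" | "failed" | "blocked" | "retest";

interface ResultSummary {
  total: number;
  counts: Record<Status, number>;
  // Share of all recorded results that passed, as a percentage rounded to one decimal.
  passRate: number;
}

// Hypothetical helper: tallies statuses and computes a pass rate.
function summarizeResults(statuses: Status[]): ResultSummary {
  const counts: Record<Status, number> = { passed: 0, failed: 0, blocked: 0, retest: 0 };
  for (const s of statuses) counts[s] += 1;
  const total = statuses.length;
  // Guard against division by zero when nothing has been recorded yet.
  const passRate = total === 0 ? 0 : Math.round((counts.passed / total) * 1000) / 10;
  return { total, counts, passRate };
}

const summary = summarizeResults(["passed", "passed", "failed", "blocked"]);
```

For the four results above, the summary reports 2 passed out of 4, a pass rate of 50.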

We require specific QA reports at certain points in the development process as part of the collaboration cycle. For a detailed description of the Platform’s QA requirements, see Platform QA Standards.

Help and feedback

Get help from the Platform Support Team in Slack.

Submit a feature idea to the Platform.