QA standards


Overview

This page outlines the QA Standards VFS teams need to meet to launch a product on VA.gov through the Collaboration Cycle. The goal of these QA standards is to make sure that the VFS product teams have met business goals, and that the product’s functionality behaves as intended.

VFS teams must provide QA artifacts showing they meet the QA Standards. The Governance Team will review these artifacts at the Staging Review touchpoint to determine if the product meets the standards.

Some QA Standards are launch-blocking. If any launch-blocking standard is not met, the product cannot launch on VA.gov.

All QA standards must be addressed. Teams should check every box for QA artifacts at staging review. If a standard doesn't apply to your product, provide a brief explanation why.

QA artifacts are required for Staging Reviews. Teams must provide artifacts 4 business days before the scheduled Staging Review, or the Governance Team will cancel the review and it will need to be rescheduled.
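As a rough illustration of the 4-business-day rule, the TypeScript sketch below counts back from the Staging Review date to the latest day artifacts can be submitted. It is a minimal sketch under stated assumptions: business days are Monday through Friday, federal holidays are not skipped, and the function name `artifactDeadline` is hypothetical. Confirm the actual deadline with the Governance Team.

```typescript
// Hypothetical helper: counts back N business days (Mon-Fri) from the review date.
// Assumption: federal holidays are NOT excluded; dates are handled in UTC.
function artifactDeadline(reviewDate: Date, businessDays = 4): Date {
  const d = new Date(
    Date.UTC(reviewDate.getUTCFullYear(), reviewDate.getUTCMonth(), reviewDate.getUTCDate()),
  );
  let remaining = businessDays;
  while (remaining > 0) {
    d.setUTCDate(d.getUTCDate() - 1); // step back one calendar day
    const day = d.getUTCDay(); // 0 = Sunday, 6 = Saturday
    if (day !== 0 && day !== 6) remaining--; // only weekdays count
  }
  return d;
}

// A Thursday review (2025-01-16) gives a Friday deadline one week earlier.
console.log(artifactDeadline(new Date(Date.UTC(2025, 0, 16))).toISOString().slice(0, 10));
```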

Exceptions

Static pages (pages with content only from Drupal) don't need to meet QA Standards since they don't have dynamic content requiring extensive QA testing.

VA.gov QA Standards

Please note: These standards will likely evolve over time as tooling evolves and processes mature.

| Standard area | ID | Standard description | Severity |
|---|---|---|---|
| Regression Test Plan | QA1 | The product must have a regression test plan that proves the new changes don't break previously integrated functionality. | Launch-blocking |
| Test Plan | QA2 | The product must have a test plan that describes the method(s) that will be used to verify product changes. | Launch-blocking |
| Traceability Reports | QA3 | The product must have a Coverage for References report that demonstrates user stories are verified by test cases in the test plan. The product must also have a Summary (Defects) report that demonstrates that defects found during QA testing were identified through test case execution. | Launch-blocking |
| E2E Test Participation | QA4 | The product must have 1 or more end-to-end (E2E) tests. | Launch-blocking |
| E2E Tests - Best Practice Adherence | QA9 | E2E tests must follow Platform best practices for writing tests. | Launch-blocking |
| E2E Test Execution Time | QA10 | All E2E test files for the product must complete execution in under 1 minute. | Launch-blocking |
| Unit Test Coverage | QA5 | The overall product must have 80% or higher unit test coverage in each category: Lines, Functions, Statements, and Branches. | Launch-blocking |
| Unit Tests - Best Practice Adherence | QA11 | Unit tests must follow Platform best practices for writing tests. | Launch-blocking |
| Endpoint Monitoring | QA6 | All endpoints that the product accesses must be monitored in Datadog. The team must complete a playbook that specifies how the team will handle any errors that fire. | Launch-blocking |
| Logging Silent Failures | QA7 | Product teams must verify that they have taken steps to prevent all silent failures in asynchronous form submissions or state why this standard is not applicable. | Launch-blocking |
| PDF Form Version Validation | QA8 | Updated digital forms must use the most current, officially approved PDF submission version to ensure accuracy, compliance, and uninterrupted processing. | Potentially launch-blocking; see the detailed description below |
| No Cross-App Dependencies | QA12 | Applications must be built in isolation with no cross-app dependencies, excluding static pages and platform components. | Launch-blocking |

How standards are validated

TestRail is the preferred tool for QA artifacts. You will need access to TestRail to create and view these artifacts. For information on getting access, see "TestRail access" under "Request access to tools: additional access for developers."

While teams may use other tools, TestRail provides the most consistent format for reviewers. If you choose not to use TestRail, you must still provide all required information in a clear, structured format (see examples below for requirements).

Regression Test Plan

A Regression Test Plan verifies that new changes don't break existing features. It validates that functionality which existed before your team's work remains intact after your changes.

For new features, include tests that verify:

Users can navigate through VA.gov to reach the new feature

Existing VA.gov pages remain unaffected by the new feature

Test case requirements:

Each test case must include:

Clear login steps (if applicable)

Navigation steps with exact URLs or click paths

Specific input values to enter

Expected results for each step

Pass/fail criteria

Example test case format:

Test Case: Verify Form Submission

Navigate to va.gov

Click "Sign in" and log in with test user credentials

Navigate to /example-form-path

Enter "Test" in First Name field

Enter "User" in Last Name field

Expected: Form accepts input and shows no validation errors

Click "Continue"

Expected: User advances to next page of form

Creating in TestRail: Create a Regression Test Plan under the Test Runs & Results tab. For an example, see the VSP-Facility Locator regression plan.

Alternative format: If not using TestRail, provide a Markdown file, spreadsheet, or other format that includes the test description, steps, expected results, actual results, and pass/fail status.
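For teams using the Markdown alternative, the sketch below shows one way to keep test cases structured so every required field (description, steps, expected results, actual results, and pass/fail status) is always present. The `TestCase` shape and `toMarkdown` function are hypothetical illustrations, not a Platform-defined format.

```typescript
// Hypothetical record shape covering the fields the alternative format requires.
type Status = "pass" | "fail" | "not run";

interface TestStep {
  action: string; // e.g. 'Enter "Test" in First Name field'
  expected: string; // expected result for this step
  actual?: string; // filled in during execution
}

interface TestCase {
  id: string;
  description: string;
  steps: TestStep[];
  status: Status;
}

// Renders one test case as a Markdown heading plus a step table.
function toMarkdown(tc: TestCase): string {
  const esc = (s: string) => s.replace(/\|/g, "\\|"); // keep pipes from breaking the table
  const rows = tc.steps.map(
    (s, i) => `| ${i + 1} | ${esc(s.action)} | ${esc(s.expected)} | ${esc(s.actual ?? "")} |`,
  );
  return [
    `### ${tc.id}: ${tc.description} (${tc.status})`,
    "",
    "| Step | Action | Expected result | Actual result |",
    "|---|---|---|---|",
    ...rows,
  ].join("\n");
}
```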

Test Plan

A Test Plan formally describes how you'll verify new or changed product features. Consider both new features and potential impacts to existing features when creating your plan. Execute the plan and track results.

Test case requirements: Follow the same quality standards as outlined in the Regression Test Plan section above.

Creating in TestRail: Create a Test Plan under the Test Runs & Results tab. For an example, see the Cross-Browser Search test plan.

Alternative format: If not using TestRail, provide documentation that includes the same test case requirements listed in the Regression Test Plan section.

Traceability Reports

Traceability Reports consist of two required components:

Coverage for References

Shows what percentage of user stories are covered by test cases in your Test Plan. This verifies that all requirements have corresponding tests.

How to generate in TestRail:

Navigate to the Reports tab

Click "Coverage for References" in the Create Report panel

Select your test suite and requirements

Generate report


Alternative format: Provide a document showing each user story ID mapped to its test case IDs, with a total coverage percentage.
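The coverage percentage in a non-TestRail report is simple arithmetic: the share of user stories referenced by at least one test case. A minimal sketch, assuming a mapping from story IDs to test case IDs; the function name and IDs are hypothetical.

```typescript
// A user story counts as covered when at least one test case references it.
function referenceCoverage(
  stories: string[],
  storyToTests: Record<string, string[]>,
): { uncovered: string[]; percent: number } {
  const uncovered = stories.filter((id) => (storyToTests[id] ?? []).length === 0);
  const covered = stories.length - uncovered.length;
  // Report 0% rather than a vacuous 100% when no stories are listed.
  const percent = stories.length === 0 ? 0 : (covered / stories.length) * 100;
  return { uncovered, percent };
}

// 2 of 4 stories have test cases, so coverage is 50% and US-3, US-4 need tests.
const report = referenceCoverage(["US-1", "US-2", "US-3", "US-4"], {
  "US-1": ["C101"],
  "US-2": ["C102", "C103"],
  "US-3": [],
});
console.log(report);
```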

Summary (Defects)

Shows what percentage of defects were found by executing Test Plan test cases, and which defects are resolved or outstanding.

How to generate in TestRail:

Navigate to the Reports tab

Click "Summary (Defects)" in the Create Report panel

Select your test runs

Generate report


Alternative format: Provide a defect summary that includes total defects found, defects found through test execution, and resolution status for each defect.
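A non-TestRail defect summary can be derived from a simple defect list. The sketch below assumes each defect records whether it was found by executing a test case and whether it is resolved; the `Defect` shape and `defectSummary` name are hypothetical.

```typescript
// "other" covers defects found outside test case execution (e.g. ad hoc exploration).
type DefectSource = "test-execution" | "other";

interface Defect {
  id: string;
  source: DefectSource;
  resolved: boolean;
}

function defectSummary(defects: Defect[]) {
  const total = defects.length;
  const viaTests = defects.filter((d) => d.source === "test-execution").length;
  return {
    total,
    viaTests,
    percentViaTests: total === 0 ? 0 : (viaTests / total) * 100,
    outstanding: defects.filter((d) => !d.resolved).map((d) => d.id),
  };
}

const summary = defectSummary([
  { id: "D-1", source: "test-execution", resolved: true },
  { id: "D-2", source: "test-execution", resolved: true },
  { id: "D-3", source: "test-execution", resolved: true },
  { id: "D-4", source: "other", resolved: false },
]);
console.log(summary); // 3 of 4 found via tests; D-4 still outstanding
```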

E2E Test Participation

Teams must create at least one Cypress test spec in a tests/e2e folder in the product's directory, following the conventions in "Writing an end-to-end test."

Required artifact: Provide a direct link to your E2E test file or folder in GitHub (e.g., https://github.com/department-of-veterans-affairs/vets-website/tree/main/src/applications/[your-app]/tests/e2e).

E2E Tests - Best Practice Adherence

End-to-end tests must follow Platform best practices for writing tests. This ensures tests run efficiently and effectively validate your application's functionality.

Required artifact: Provide a link to your E2E test files in GitHub and note any exceptions.

E2E Test Execution Time

All E2E tests for your product must complete in under 1 minute. Long-running tests cause Cypress performance issues and slow down the CI pipeline.

If your tests exceed 1 minute: Break long tests into smaller, focused tests. For example, a single 6-minute test should be split into 6 separate tests. Each smaller test will run faster and more reliably than one long test due to how Cypress handles memory and performance over time. Use save-in-progress (SiP) functionality or other approaches to break apart multi-step form tests. Also work to optimize your tests by reducing unnecessary waits, combining related assertions, and following E2E best practices.

Required artifact: Verify your E2E tests execute in under a minute. You can find this data in the DOMO E2E Test Execution Time dashboard or in a CI run’s Cypress Tests step. State “E2E tests execute in under 1 minute” on the artifact checklist for staging review.
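If you collect spec durations by hand (for example, from a CI run's Cypress Tests step), a quick check like the one below lists the specs to split or optimize. This is a sketch under assumptions: the `SpecTiming` shape is hypothetical rather than a DOMO or Cypress export format, and it applies the 1-minute limit to each spec file.

```typescript
// Hypothetical timing record transcribed from CI output.
interface SpecTiming {
  spec: string;
  durationMs: number;
}

const LIMIT_MS = 60_000; // "under 1 minute"

// Returns specs that do not finish under the limit, slowest first.
function slowSpecs(timings: SpecTiming[], limitMs = LIMIT_MS): SpecTiming[] {
  return timings
    .filter((t) => t.durationMs >= limitMs)
    .sort((a, b) => b.durationMs - a.durationMs);
}

const offenders = slowSpecs([
  { spec: "a.cypress.spec.js", durationMs: 45_000 },
  { spec: "b.cypress.spec.js", durationMs: 360_000 },
  { spec: "c.cypress.spec.js", durationMs: 60_000 },
]);
console.log(offenders.map((t) => t.spec)); // b first, then c (exactly 60s is not "under")
```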

Unit Test Coverage

Products must have at least 80% unit test coverage for front-end code in each category: Lines, Functions, Statements, and Branches.

Required artifact:

The exact command used to generate coverage from the vets-website repository, or a screenshot, text, or HTML output showing coverage percentages.

How to generate coverage:

Run this command from the vets-website repository:

yarn test:coverage-app {app-name}

# Or if your app is in a subfolder, use the --app-folder parameter.

yarn test:coverage-app --app-folder parent-directory/child-directory

Note: The Unit Test Coverage Report may show outdated information. Always verify coverage by running tests locally at the time of staging review.
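To check the 80% threshold in all four categories at once, the sketch below evaluates a coverage summary object. It assumes input shaped like the `total` entry of Istanbul's json-summary report (`lines`, `functions`, `statements`, `branches`, each with a `pct` field); whether your coverage run emits that report is an assumption to verify. The function name `failingCategories` is hypothetical.

```typescript
// Minimal subset of an Istanbul json-summary "total" entry (assumed input shape).
interface Metric {
  pct: number;
}
interface CoverageTotals {
  lines: Metric;
  functions: Metric;
  statements: Metric;
  branches: Metric;
}

const CATEGORIES = ["lines", "functions", "statements", "branches"] as const;

// Lists every category below the threshold; an empty result means all four pass.
function failingCategories(total: CoverageTotals, threshold = 80): string[] {
  return CATEGORIES.filter((c) => total[c].pct < threshold).map(
    (c) => `${c}: ${total[c].pct}% < ${threshold}%`,
  );
}

const failing = failingCategories({
  lines: { pct: 91.2 },
  functions: { pct: 84 },
  statements: { pct: 90.5 },
  branches: { pct: 76.3 },
});
console.log(failing); // branches is the only category below 80%
```

Checking each category separately matters: a high line percentage does not compensate for branch coverage below 80%.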

Unit Tests - Best Practice Adherence

Unit tests must follow Platform best practices for writing tests. This ensures tests run efficiently and effectively validate your application's functionality.

Required artifact: Provide a link to your unit test files in GitHub and note any exceptions.

Endpoint Monitoring

All endpoints that the product accesses need to be monitored in Datadog. Monitors should be configured according to the guidance in Endpoint monitoring. To satisfy this standard, teams must complete a playbook that details how they will respond to any errors that fire.

Minimum requirements to meet this standard

Endpoint monitoring must cover the three fundamental types of problems:

Unexpectedly high errors

Unexpectedly low traffic

Silent failures (silent to the end user) of any kind

PII and PHI must never be saved in logs.

Errors that appear in Datadog should be routed to a Slack channel. (You should name this channel something like #(team name)-notifications).

The Slack channel should be monitored daily by the VFS team.

The team should establish a process for acknowledging and assigning errors to a team member to investigate and resolve.
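Datadog monitors are configured in Datadog itself, not in application code, but the three conditions they must catch can be expressed as simple checks over a time window. The sketch below is purely illustrative: the `EndpointWindow` and `Thresholds` shapes are hypothetical, and the threshold values are placeholders to tune, not Platform recommendations.

```typescript
// Hypothetical per-endpoint counts for one monitoring window.
interface EndpointWindow {
  endpoint: string;
  requests: number;
  errors: number;
  silentFailures: number; // failures the end user was never told about
}

interface Thresholds {
  maxErrorRate: number; // e.g. 0.05 = 5% of requests
  minRequests: number; // expected minimum traffic per window
}

type Alert = "high-errors" | "low-traffic" | "silent-failures";

// Evaluates one window against the three problem types monitoring must cover.
function evaluate(w: EndpointWindow, t: Thresholds): Alert[] {
  const alerts: Alert[] = [];
  if (w.requests > 0 && w.errors / w.requests > t.maxErrorRate) alerts.push("high-errors");
  if (w.requests < t.minRequests) alerts.push("low-traffic");
  if (w.silentFailures > 0) alerts.push("silent-failures");
  return alerts;
}

const t: Thresholds = { maxErrorRate: 0.05, minRequests: 50 };
console.log(evaluate({ endpoint: "/example", requests: 200, errors: 30, silentFailures: 1 }, t));
```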

Behaviors to avoid

Do not silence errors because there are too many. Instead, tune monitoring thresholds so that alerts stay useful.

Do not assign monitoring to one person.

Logging Silent Failures

A silent failure happens when a user takes an action that fails, but they don't receive any notification about the failure.

Applicability

This standard applies only to products using asynchronous submission methods. If your product processes submissions immediately (synchronously), state this as the reason why this standard doesn't apply.

Standard Requirements

To satisfy this standard, VFS teams must follow one of these options:

Option 1 - No Asynchronous Form Submissions

State "This product does not use asynchronous form submissions" as your justification

Option 2 - Asynchronous Form Submissions

Provide direct links to the lines of code that log silent failures to StatsD. Follow the guidelines in the silent failures guide for metrics implementation.

Link to a Datadog dashboard showing your StatsD metrics.

If using VA Notify for action-needed emails, provide links to code showing:

VA Notify callback usage

Use of the silent_failure_avoided tag (not silent_failure_no_confirmation)
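To show what a tagged StatsD counter increment carries, the sketch below builds a DogStatsD-style counter datagram (`<name>:<value>|c|#<tag>,<tag>`). In practice you would call your StatsD client library instead of building datagrams by hand, and you should take metric names and tags from the silent failures guide; the metric name and tags here are hypothetical.

```typescript
// Builds a DogStatsD counter datagram: <name>:<value>|c|#<tag1>,<tag2>
function counterDatagram(name: string, tags: string[] = [], value = 1): string {
  const base = `${name}:${value}|c`;
  return tags.length > 0 ? `${base}|#${tags.join(",")}` : base;
}

// Hypothetical metric name and tags, for illustration only.
console.log(counterDatagram("example_app.silent_failure", ["service:example-app", "function:submit"]));
```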

PDF Form Version Validation

Some forms are submitted by generating a PDF, while others are submitted online but fall back to a PDF if needed. The PDF used should always be the latest version available from the official Find a form page. PDF forms usually expire every three years and must be updated. When a new PDF is released, the old version can be used for up to one year, giving teams time to update their online forms.

For digital forms, this standard compares the expiration date of the generated PDF with today's date. Depending on how long the PDF has been expired, this may be launch-blocking.

Applicability

This standard only applies to forms that:

Use a generated PDF as their main way to submit a form

Use a generated PDF as a backup method if online submission isn’t available

Standard Requirements

If the PDF has been expired for 10 months or more:

The team is blocked from launching to VA.gov

The team must update the PDF to match the current version before launch

This is logged as a launch-blocking finding via QA standards

If the PDF has been expired for 6 to 9 months:

The team can launch but receives a warning that the form must be updated within 1 year of expiration

This is logged as a finding via QA standards

If the PDF has been expired for 5 months or less, or the PDF is not expired:

The team is allowed to launch without restrictions

If it is expired, the team is notified, but it is not logged as a finding
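The thresholds above can be expressed as a small decision function. This sketch assumes "months expired" means whole calendar months elapsed since the expiration date (a partial month does not count); that interpretation, and the names `pdfOutcome` and `wholeMonthsBetween`, are hypothetical.

```typescript
type PdfOutcome = "launch" | "launch-with-finding" | "blocked";

// Whole calendar months elapsed from `from` to `to` (UTC); a partial month is not counted.
function wholeMonthsBetween(from: Date, to: Date): number {
  let months =
    (to.getUTCFullYear() - from.getUTCFullYear()) * 12 + (to.getUTCMonth() - from.getUTCMonth());
  if (to.getUTCDate() < from.getUTCDate()) months -= 1;
  return months;
}

function pdfOutcome(expiration: Date, today: Date): { outcome: PdfOutcome; monthsExpired: number } {
  if (today.getTime() <= expiration.getTime()) return { outcome: "launch", monthsExpired: 0 };
  const m = wholeMonthsBetween(expiration, today);
  if (m >= 10) return { outcome: "blocked", monthsExpired: m }; // launch-blocking finding
  if (m >= 6) return { outcome: "launch-with-finding", monthsExpired: m }; // 6 to 9 months
  return { outcome: "launch", monthsExpired: m }; // team notified, no finding
}

const exp = new Date(Date.UTC(2024, 0, 15)); // expired 2024-01-15
console.log(pdfOutcome(exp, new Date(Date.UTC(2024, 7, 20)))); // 7 months: launch with finding
```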

No Cross-App Dependencies

Applications must be built in isolation. Your app should not import or depend on code from other applications. This excludes static pages and shared platform components.

App isolation is required for the platform to move away from the daily deploy process. Cross-app dependencies create fragile builds and unexpected failures.

Required artifact: Confirm your app has no cross-app dependencies. You can verify this using the Cross-App Import Report. State “No cross-app dependencies” on the artifact checklist for staging review.
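The Cross-App Import Report is the source of truth, but the rule itself is easy to state as a filter over resolved import paths. This sketch assumes imports are already resolved to repo-relative paths under `src/applications/`, treats anything outside that folder (such as `src/platform/`) as allowed, and assumes the static pages live in a `static-pages` app folder. All of these paths and names are hypothetical.

```typescript
// Assumed layout: apps live under src/applications/; platform code lives elsewhere.
const APP_PREFIX = "src/applications/";
const ALLOWED_APPS = new Set(["static-pages"]); // assumed static pages folder

// Returns imports that reach into another application's folder.
function crossAppImports(appDir: string, importedPaths: string[]): string[] {
  const ownPrefix = `${APP_PREFIX}${appDir}/`;
  return importedPaths.filter((p) => {
    if (!p.startsWith(APP_PREFIX)) return false; // platform code, node_modules, etc.
    if (p.startsWith(ownPrefix)) return false; // the app's own files
    const topLevel = p.slice(APP_PREFIX.length).split("/")[0];
    return !ALLOWED_APPS.has(topLevel);
  });
}

const violations = crossAppImports("my-app", [
  "src/platform/forms-system/src/js/actions",
  "src/applications/my-app/config/form",
  "src/applications/other-app/utils/helpers",
  "src/applications/static-pages/widget",
]);
console.log(violations); // only the import from other-app is flagged
```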

FAQ

Do VFS teams need to create new test plans, unit tests, and end-to-end tests for each trip through the Collaboration Cycle?

No, teams don’t need to create a specific test plan for every iteration they make to a product they bring through the Collaboration Cycle. We ask teams to provide updated testing artifacts that reflect the changes they're making to their product. Small changes don't require a full test plan.

Overall unit test code coverage must remain at least 80% after your changes.

The end-to-end test standard should also be met.

When should VFS teams complete and submit their QA artifacts for review?

Complete and link all QA materials and artifacts to the Collaboration Cycle GitHub ticket 4 business days before your scheduled Staging Review. The GitHub ticket has a specific section for linking these artifacts.

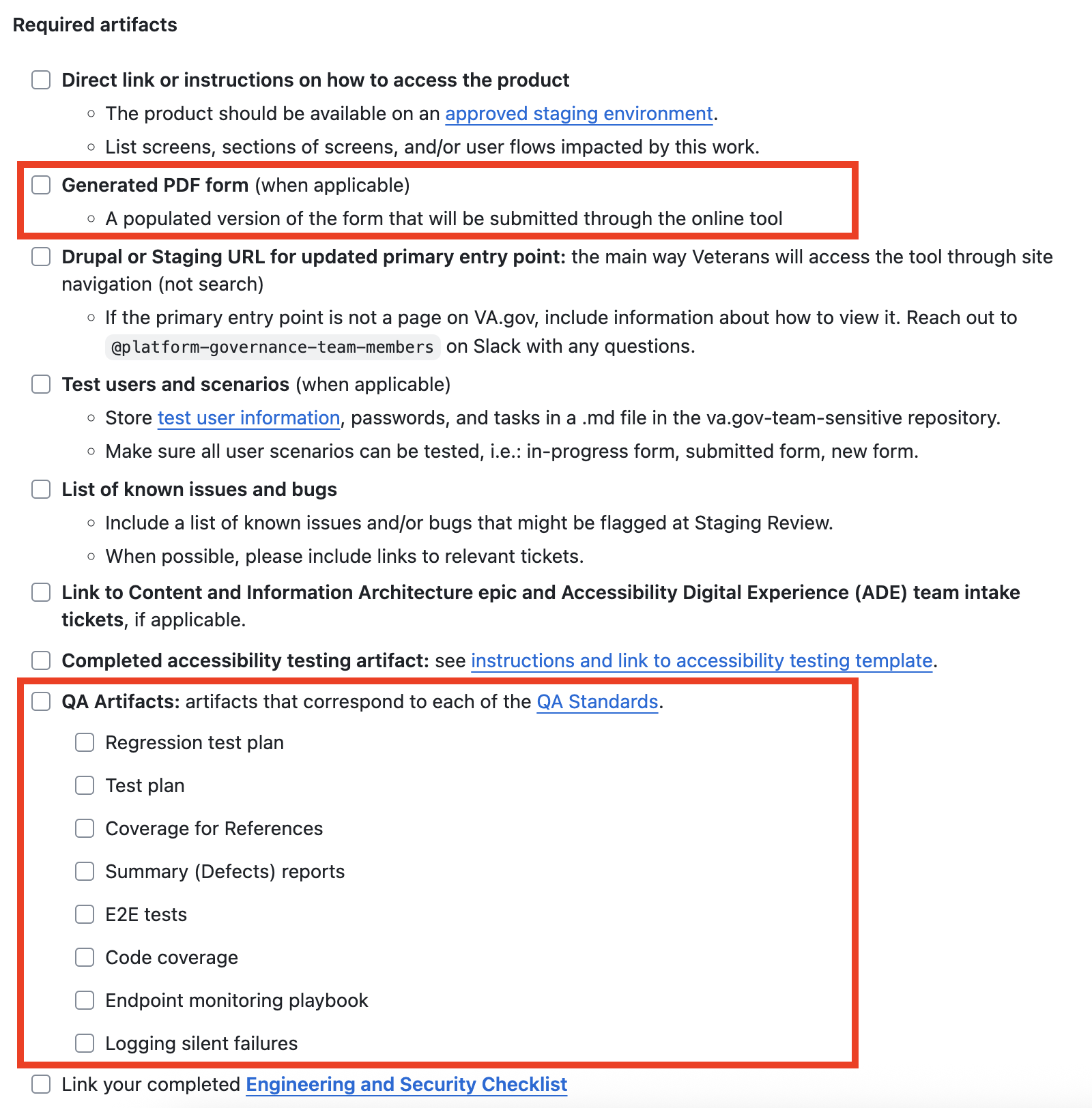

Screenshot of a Collaboration Cycle request ticket in GitHub with the QA artifacts highlighted.

Are VFS teams required to use TestRail?

TestRail is the preferred tool for QA artifacts because it provides consistency for reviewers. While teams may use other testing tools, they must still demonstrate that all QA Standards are met with the same level of detail that TestRail provides. See the alternative format requirements under each standard for guidance.

Are Regression Test Plans required for a new product?

Yes. Even though the product may be new to VA.gov, it may not be entirely new. The goal of the regression test plan is to ensure that the product still meets the requirements of the legacy product. For example, a digitized form on VA.gov should meet the requirements of the paper form.

For new features, your regression tests should verify that users can navigate to the new feature and that existing pages remain unaffected by the new feature.

What if a QA standard doesn't apply to my product?

If a standard doesn't apply to your product, you must provide a brief explanation in your staging review artifacts. For example, if your product doesn't use asynchronous submissions, explain this when addressing the Logging Silent Failures standard. Every standard must be addressed, either with the required artifacts or an explanation of why it's not applicable.

Help and feedback

Get help from the Platform Support Team in Slack.

Submit a feature idea to the Platform.