Test Stability Review

Background

A flaky test is an automated test that does not consistently pass or fail. Flakiness can stem from a poorly written test, unreliable application code, or numerous other causes. Test flakiness has many negative effects on our product. The most important is that we cannot reliably know whether all of our code is working as intended for end users. Flaky tests also cause significant delays in our development process, both on individual branches and during production deploys. If tests fail during a production deploy, the deployment does not take place, and an engineer typically spends hours rerunning workflows and troubleshooting. Our expectation was that resolving flakiness would reduce wasted engineering time while ensuring that we provide a premium experience to our end users.

Motivation

The Platform website contains documentation that outlines proper ways to test for flakiness, such as running a test in a loop, but there is no way to enforce that this guidance is followed unless a support request is filed. This led us to automate a version of this recommended practice and build follow-up behaviors around it. Running these checks would not be left to individual engineers, but ensuring that their product is truly production-ready would remain the responsibility of each engineer (or their team).

Solution

We needed a solution that would cover both E2E tests and unit tests, as both suites exhibit flakiness. The solution targets three objectives:

Routinely stability-check the full test suite

Remove blockers from the development cycle

Prevent new issues from being introduced

The solution centers around an allow list stored in BigQuery, with one list for each test type. The tables are named vets_website_unit_test_allow_list and vets_website_e2e_allow_list, respectively. Each table contains the file path of every spec file, an allowed status (true or false) indicating whether the spec is allowed to run, and three additional columns that are populated only under failure conditions.

These failures are detected during an overnight stress test that runs each test suite 20 times. If a test does not pass all 20 runs in a row, it is considered flaky and its allowed status is set to false. Among the failure columns are titles and disallowed_at, which are filled in only when a test's allowed status is false. The titles column holds the title of the test that failed in that spec, and disallowed_at records the date and time when the test first failed a stress test. When a test's allowed status is false, it is skipped in normal CI runs of its test suite so that it does not block engineers whose work is unrelated to it.

https://github.com/department-of-veterans-affairs/vets-website/actions/runs/6256314195

https://github.com/department-of-veterans-affairs/vets-website/actions/runs/6294030251
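The allow-list behavior described above can be sketched as a small in-memory model. This is a minimal Python sketch under stated assumptions, not the actual implementation: the AllowListEntry class, the run_spec callback, and all function names are hypothetical, and the real lists live in BigQuery tables rather than Python objects. It shows a spec being run 20 times, being disallowed if any run fails (with disallowed_at set only on the first failure), and disallowed specs being skipped in a normal CI run.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable, List, Optional

STRESS_RUNS = 20  # the overnight stress test runs each suite 20 times


@dataclass
class AllowListEntry:
    """Hypothetical in-memory mirror of one allow-list row."""
    spec_path: str
    allowed: bool = True
    titles: List[str] = field(default_factory=list)  # failing test titles
    disallowed_at: Optional[datetime] = None  # time of the first failure


def stress_test(run_spec: Callable[[str], List[str]], spec_path: str,
                runs: int = STRESS_RUNS) -> List[str]:
    """Run a spec `runs` times and collect titles of tests that ever failed.

    run_spec is an assumed runner that returns the failing test titles
    for one run; an empty list means that run passed.
    """
    failed: List[str] = []
    for _ in range(runs):
        for title in run_spec(spec_path):
            if title not in failed:
                failed.append(title)
    return failed


def record_overnight_result(entry: AllowListEntry, failed: List[str],
                            now: datetime) -> None:
    """Disallow a spec that failed any of its stress-test runs."""
    if not failed:
        return  # passed every run; leave the row unchanged
    entry.allowed = False
    entry.titles = failed
    if entry.disallowed_at is None:  # keep the time of the first failure
        entry.disallowed_at = now


def specs_for_normal_ci(entries: List[AllowListEntry]) -> List[str]:
    """Normal CI runs skip every spec whose allowed flag is False."""
    return [e.spec_path for e in entries if e.allowed]
```

A single failing run out of 20 is enough to flip allowed to false, which mirrors the "pass 20 times in a row" rule.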

Once a test has been disabled, it can be re-enabled automatically through improvements to the test itself or to related application code that help it pass. When a GitHub pull request is submitted, all tests in the same application directory as any of the changed files are stress tested as part of CI. This stress test covers only that limited set of tests related to the changed files. If a previously disallowed test passes this stress test, it is re-enabled on the spot. If a related test fails at this step, it is disallowed during this CI check. New test specs go through the same process. This ensures that tests exhibiting any flakiness are swiftly removed from the main CI test runs.

Example of stress tests running in CI
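The pull request flow can be sketched the same way. In this hypothetical Python sketch, app_root, related_specs, and apply_pr_stress_results are illustrative names, the allow list is modeled as a dictionary from spec path to its allowed flag, and "same application directory" is assumed to mean the first folder below src/applications (the same level used for merge blocking). Because all_specs is assumed to be the spec list on the pull request branch, newly added specs are selected automatically.

```python
from typing import Dict, Iterable, List, Optional

APPS_DIR = "src/applications/"


def app_root(path: str) -> Optional[str]:
    """Return 'src/applications/<app>' for a path under it, else None.

    Assumption: the "application directory" is the first folder below
    src/applications, the same level that merge blocking uses.
    """
    if not path.startswith(APPS_DIR):
        return None
    app = path[len(APPS_DIR):].split("/", 1)[0]
    return APPS_DIR + app if app else None


def related_specs(changed_files: Iterable[str],
                  all_specs: Iterable[str]) -> List[str]:
    """Specs that share an application directory with any changed file.

    all_specs is assumed to be the spec list on the PR branch, so newly
    added specs are picked up like any other spec.
    """
    roots = {app_root(f) for f in changed_files} - {None}
    return [spec for spec in all_specs if app_root(spec) in roots]


def apply_pr_stress_results(allow_list: Dict[str, bool],
                            passed: Dict[str, bool]) -> None:
    """Update allowed flags from one PR's stress-test results.

    A spec that passed every run is (re-)enabled on the spot; a spec that
    failed any run is disallowed; a new spec gets its first entry here.
    Specs that were not stress tested keep their current flag.
    """
    for spec, ok in passed.items():
        allow_list[spec] = ok
```

Only the related specs are stress tested, which keeps the per-PR cost proportional to the size of the affected application rather than the whole suite.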

What Happens When a Test is Disallowed?

When a test is disallowed, it is skipped in its respective CI jobs to avoid blocking engineering workflows that are not related to the disallowed test. However, the impacted team faces consequences that block further work on its application.

While a test is disallowed, no code merges to its application directory are permitted until the test is fixed. Commenting out or skipping the failing test will NOT clear this block, because the test is still flagged as flaky on the allow list. Blocking is applied at the application's root folder, the first folder below the applications directory. For example:

If a test spec at the file path src/applications/appeals/995/tests/995-contact-loop.cypress.spec.js were disallowed, all changes to src/applications/appeals would be blocked from merging until the test passes the stress test in a CI run.

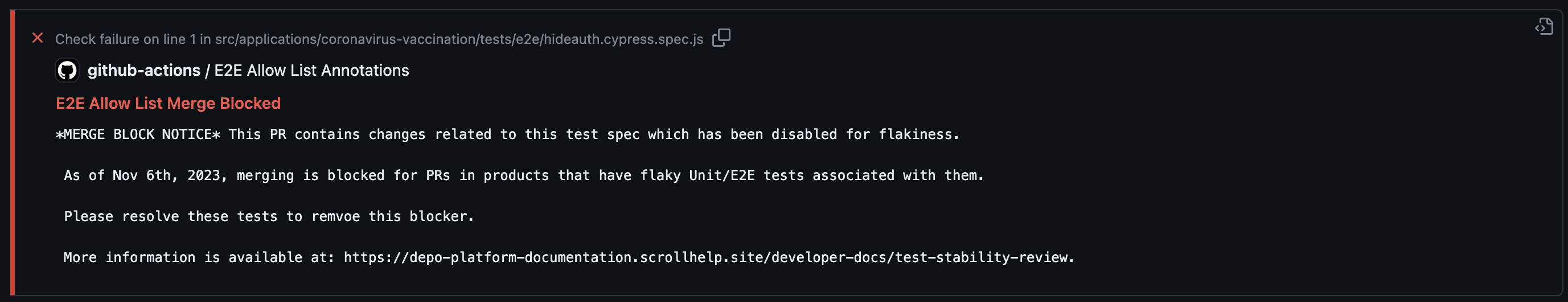

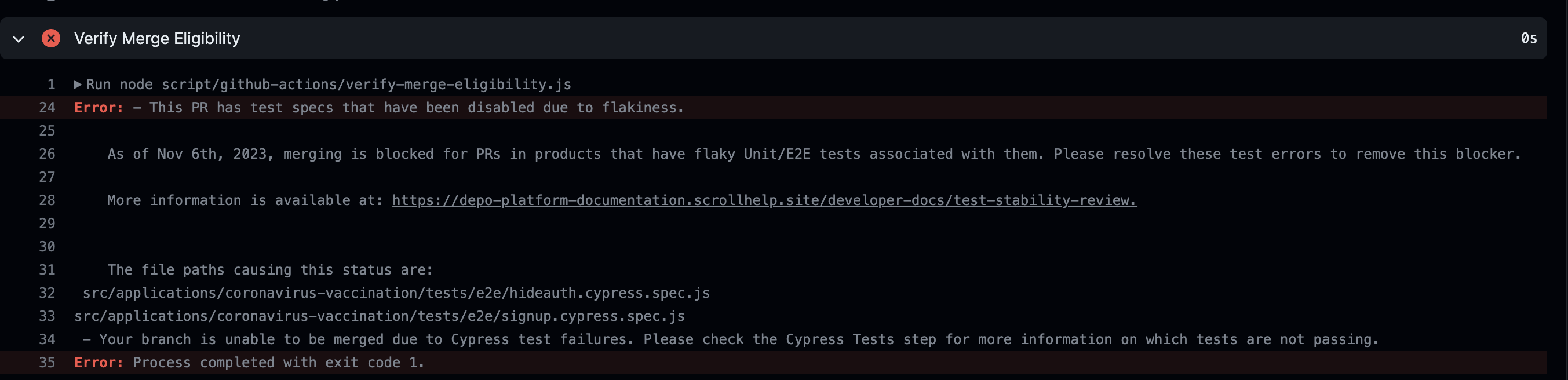

Example of E2E blocked merge annotation

Example of blocked merge CI output

A disallowed test immediately blocks any further updates to its app unless an update makes the test pass a stress test. The merge-eligibility check runs after all stress tests for a commit have completed, so if a commit contains a fix for the disallowed test, the test is cleared before the check is performed.
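The blocking rules and the order of the merge check can be sketched as follows. This is a hypothetical Python model, not the actual CI code: blocking_root, blocked_roots, and merge_check are illustrative names, the allow list is a dictionary from spec path to allowed flag, and pr_stress_passed stands in for the results of the commit's stress tests. The key point is ordering: the commit's stress results are applied before the gate is evaluated, so a fix clears its own block.

```python
from typing import Dict, Iterable, Optional, Set

APPS_DIR = "src/applications/"


def blocking_root(path: str) -> Optional[str]:
    """Root folder just below src/applications that a path belongs to."""
    if not path.startswith(APPS_DIR):
        return None
    app = path[len(APPS_DIR):].split("/", 1)[0]
    return APPS_DIR + app if app else None


def blocked_roots(allow_list: Dict[str, bool]) -> Set[str]:
    """Application roots that contain at least one disallowed spec."""
    roots: Set[str] = set()
    for spec, allowed in allow_list.items():
        root = blocking_root(spec)
        if not allowed and root is not None:
            roots.add(root)
    return roots


def merge_check(changed_files: Iterable[str], allow_list: Dict[str, bool],
                pr_stress_passed: Dict[str, bool]) -> bool:
    """Return True if a commit may merge. Note: mutates allow_list."""
    # Step 1: apply this commit's stress-test results first...
    allow_list.update(pr_stress_passed)
    # Step 2: ...then evaluate the gate, so a fix clears its own block.
    blocked = blocked_roots(allow_list)
    return not any(blocking_root(f) in blocked for f in changed_files)
```

With the 995-contact-loop spec disallowed, a change to src/applications/gi still merges, a change anywhere under src/applications/appeals is blocked, and a commit whose stress run passes the spec clears the block.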

Additional Notes

The allow-list-notify.yml and allow-list-publish.yml files in the qa-standards-dashboard-data repo contain workflows that automate two features. These workflows run at 9am Eastern time, Monday through Friday.

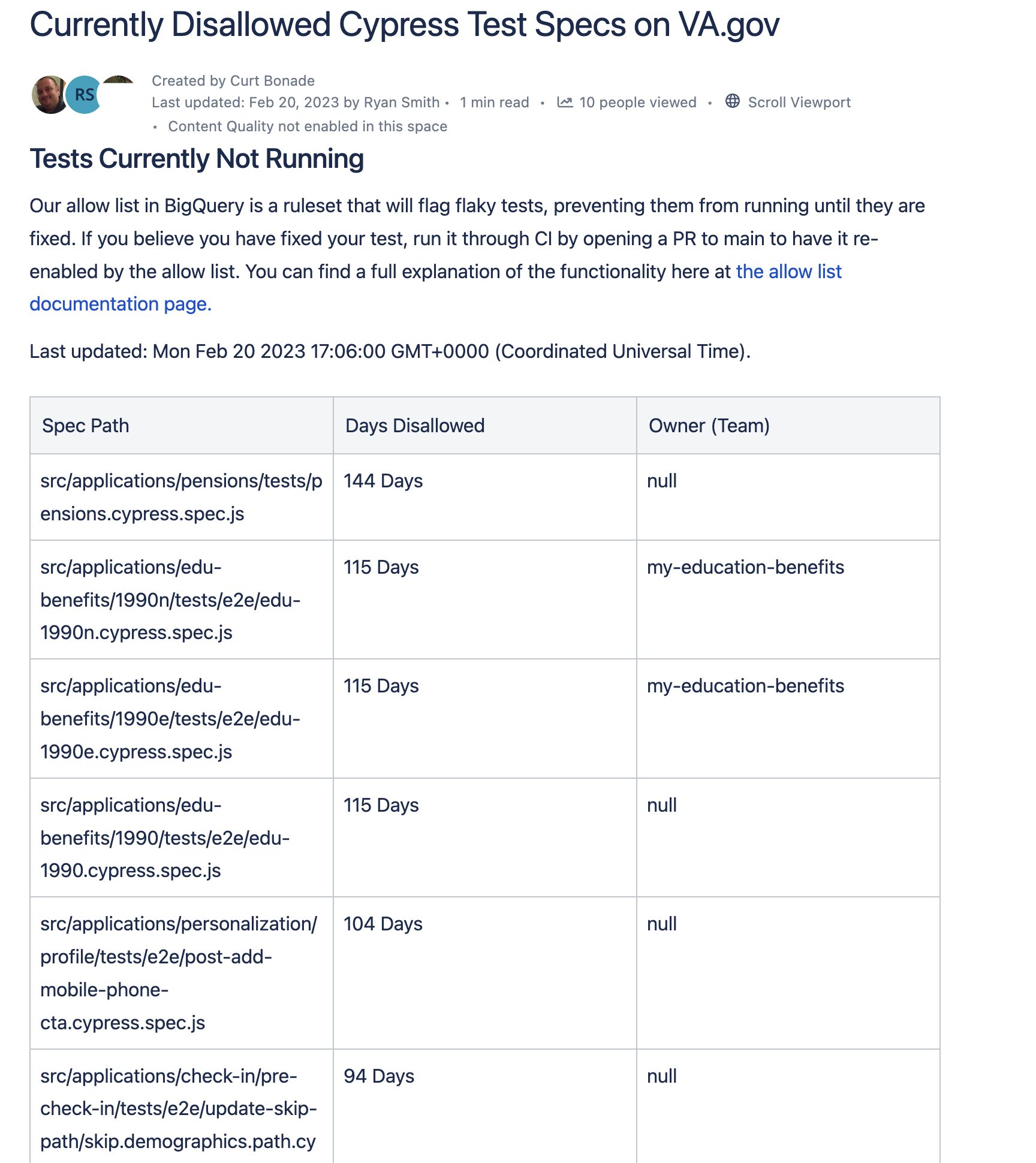

Confluence/Platform Website page

The first workflow creates a page in Confluence, which is published to Platform Docs during the platform's regularly scheduled publishing. The page lists the test specs that are currently disallowed, how many days each has been disallowed, and, where it can be determined, the team designated as the owner of each spec. This workflow runs once daily, Monday through Friday.

Example of the list of disallowed E2E tests on the Platform website

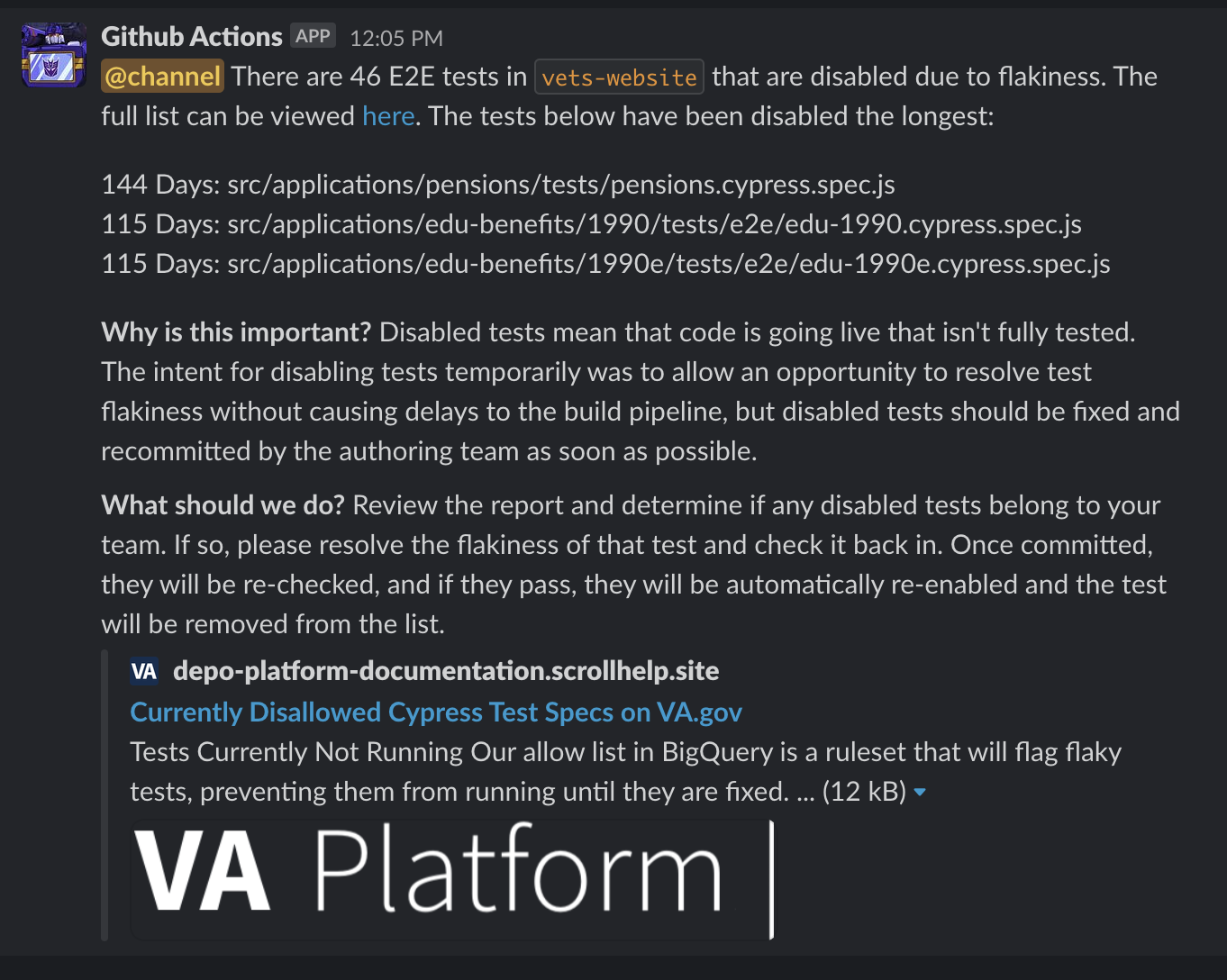
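The contents of that page can be derived from the allow list. In this hypothetical Python sketch, the owners mapping from path prefixes to team names is an assumption (the document does not say how ownership is determined), and build_report and find_owner are illustrative names. Days disallowed are counted as whole days since disallowed_at, and specs with no matching owner are reported with no team.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Optional, Tuple


@dataclass
class ReportRow:
    spec_path: str
    days_disallowed: int
    owner: Optional[str]  # None when no owner can be determined


def find_owner(spec_path: str, owners: Dict[str, str]) -> Optional[str]:
    """Longest-prefix match against a hypothetical path -> team mapping."""
    matches = [prefix for prefix in owners if spec_path.startswith(prefix)]
    return owners[max(matches, key=len)] if matches else None


def build_report(disallowed: List[Tuple[str, datetime]],
                 owners: Dict[str, str], now: datetime) -> List[ReportRow]:
    """Rows for the disallowed-spec page, longest-disallowed first."""
    rows = [ReportRow(path, (now - since).days, find_owner(path, owners))
            for path, since in disallowed]
    return sorted(rows, key=lambda r: r.days_disallowed, reverse=True)
```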

Slack Notification

A Slack notification is also posted to the #vfs-all-teams channel, delivering this information to a place where QA issues can be followed up on as needed. This notification is sent every Monday and calls extra attention to the three test specs that have been disallowed the longest.

#vfs-all-teams notification example
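Selecting the specs for the Monday callout amounts to a sort on disallowed_at. This Python sketch is illustrative only: the message format and function names are hypothetical, and posting to Slack is deliberately left out rather than guessing at the workflow's actual integration.

```python
from datetime import date, datetime
from typing import Dict, List, Optional


def longest_disallowed(disallowed_at: Dict[str, datetime],
                       n: int = 3) -> List[str]:
    """The n specs disallowed the longest (earliest disallowed_at first)."""
    return sorted(disallowed_at, key=lambda spec: disallowed_at[spec])[:n]


def slack_message(disallowed_at: Dict[str, datetime],
                  today: date) -> Optional[str]:
    """Build a hypothetical Monday message; None on other weekdays."""
    if today.weekday() != 0:  # Monday is 0 in Python's date.weekday()
        return None
    lines = [f"{len(disallowed_at)} test specs are currently disallowed.",
             "Disallowed the longest:"]
    for spec in longest_disallowed(disallowed_at):
        days = (today - disallowed_at[spec].date()).days
        lines.append(f"- {spec} ({days} days)")
    return "\n".join(lines)
```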
